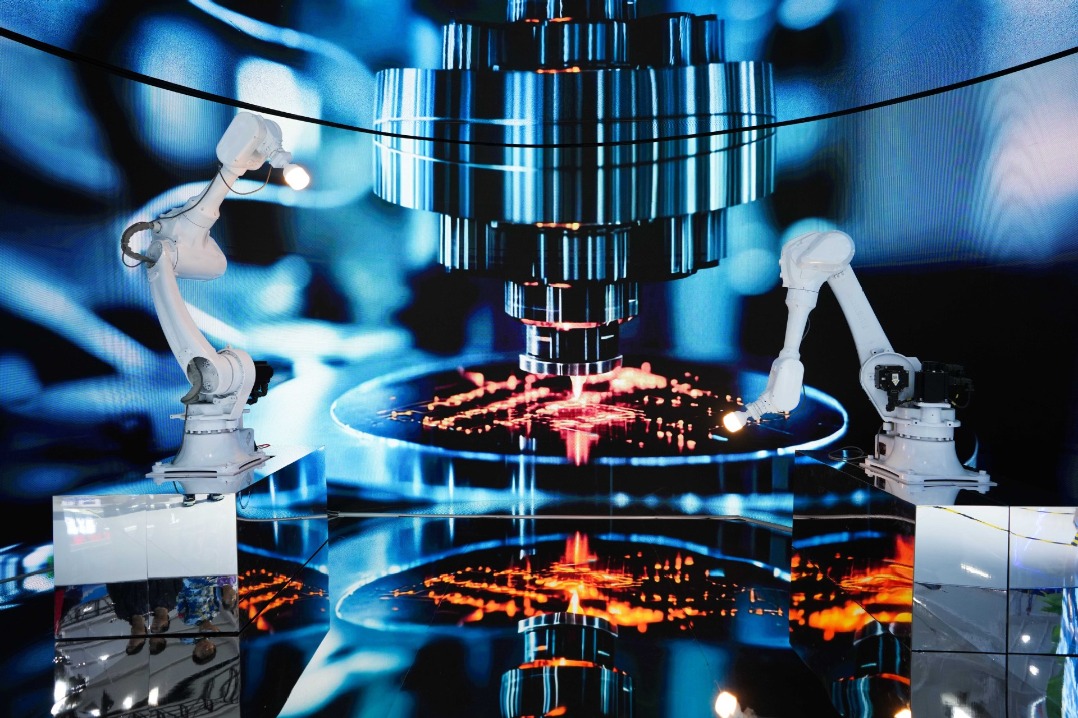

Baidu open-sources Ernie 4.5 multimodal AI model

Chinese tech heavyweight Baidu Inc on Monday open-sourced its Ernie 4.5 series of multimodal large language models, which comprises 10 distinct variants, as part of a broader push to advance AI technology.

The model family includes mixture-of-experts (MoE) models with 47 billion and 3 billion active parameters, the largest of which has 424 billion total parameters, as well as a dense model with 0.3 billion parameters.

Baidu said the MoE architecture enhances multimodal understanding while also improving performance on text-related tasks. According to the company, all models were trained efficiently using its PaddlePaddle deep learning framework, which supports high-performance inference and streamlined deployment.

Baidu said its experimental results show that the models achieve state-of-the-art performance across multiple text and multimodal benchmarks, particularly in instruction following, knowledge memorization, visual understanding and multimodal reasoning.

Ernie 4.5, which was launched in March, is Baidu's multimodal foundation model, capable of understanding and generating text, images, audio and video content based on prompts.